As a popular object storage service, Amazon S3 allows you to store data in buckets. However, in most cases, some data becomes irrelevant and less frequently accessed over time. If you leave things as they are, the data will continue to take up space, leading to extra storage costs, not to mention performance inefficiencies.

The good news is that Amazon S3 provides various storage classes for different access patterns that align with your storage performance and cost requirements. Besides, by configuring S3 lifecycle policies, you can transition objects to other storage classes or permanently delete them after a specific period.

This post will guide you through AWS S3 storage classes and introduce lifecycle policies to help you develop a cost-optimized and effective data management process.

S3 Standard & Express One Zone for Frequently Accessed Objects

AWS offers the S3 Standard storage class for frequently accessed objects and S3 Express One Zone for the most frequently accessed, latency-sensitive objects that require high-performance storage. As its name implies, S3 Express One Zone stores objects in only one AWS Availability Zone. It is a performance option rather than a cheaper one: its storage price per GB is higher than that of S3 Standard.

However, if that AZ has a problem, your data may be lost or become unavailable, since it is not replicated across multiple AZs. With the S3 Standard storage class, objects are stored across several Availability Zones, so S3 Standard offers more resiliency than S3 Express One Zone. Therefore, we advise you to choose S3 Express One Zone only for reproducible data that needs the lowest possible latency, so as not to take any risk.

What are Your Options for Infrequently Accessed Objects?

Objects you don’t need frequently but still require millisecond access can be stored in the S3 Standard-IA (Infrequent Access) or S3 One Zone-IA storage class. Both offer the same low latency as S3 Standard. S3 Standard-IA also stores objects across several Availability Zones, like S3 Standard, whereas S3 One Zone-IA keeps them in a single AZ.

S3 Standard-IA and S3 One Zone-IA both have lower storage prices than S3 Standard. However, there is a retrieval fee each time an object is accessed. So, you should only use them for objects that are accessed infrequently, for example, a few times a month.

Both S3 Standard-IA and S3 One Zone-IA have a minimum storage duration of 30 days. If you delete an object or move it to another class before 30 days have passed, you are still charged for the full 30 days in that class, on top of what you pay in the class the object moves to.

Besides, the minimum billable object size is 128 KB in S3 Standard-IA and S3 One Zone-IA. So, if an object is smaller than 128 KB, you still pay for 128 KB. Hence, these classes may not be cost-effective for small objects.
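To make these two minimums concrete, here is a small sketch of how the billed usage of a single object could be estimated. The function names are made up for illustration, and the calculation only covers the 30-day and 128 KB rules described above; it deliberately leaves out prices, request fees, and retrieval fees.

```python
from typing import Tuple

KB = 1024
MIN_BILLABLE_BYTES = 128 * KB  # minimum billable object size (from the text)
MIN_BILLABLE_DAYS = 30         # minimum storage duration (from the text)


def billable_usage(size_bytes: int, days_stored: int) -> Tuple[int, int]:
    """Return (billable_bytes, billable_days) for one object in an IA class."""
    billable_bytes = max(size_bytes, MIN_BILLABLE_BYTES)
    billable_days = max(days_stored, MIN_BILLABLE_DAYS)
    return billable_bytes, billable_days


def overhead_factor(size_bytes: int, days_stored: int) -> float:
    """How many times more byte-days are billed than were actually used."""
    billable_bytes, billable_days = billable_usage(size_bytes, days_stored)
    return (billable_bytes * billable_days) / (size_bytes * days_stored)


# A 16 KB object deleted after 10 days is billed as 128 KB for 30 days.
print(billable_usage(16 * KB, 10))   # (131072, 30)
print(overhead_factor(16 * KB, 10))  # 24.0
```

In this example the object is billed for 24 times the byte-days it really used, which is why small or short-lived objects are usually better left in S3 Standard.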

S3 Glacier Storage Classes: ‘Freeze’ Your Rarely Used Objects in the Glacier

Until now, we have discussed storage options for frequently or infrequently accessed objects. But what about the rarely accessed data you must keep for archival purposes, regulatory requirements, etc.? Amazon S3 Glacier storage classes are built for this purpose. You can choose among three archival storage classes offering different retrieval times and storage costs: S3 Glacier Instant Retrieval, S3 Glacier Flexible Retrieval, and S3 Glacier Deep Archive. Let’s analyze each S3 Glacier storage class.

S3 Glacier Instant Retrieval

Suppose you have rarely accessed data but still need immediate access. The S3 Glacier Instant Retrieval storage class offers millisecond retrieval time, the same as S3 Standard or S3 Standard-IA, with much lower storage costs. It can be suitable for cases like image hosting, storing health records that aren’t used generally but need immediate access during a doctor’s appointment, or archived photos or videos that sometimes require instant access.

So, if you have data you access only once per quarter, the S3 Glacier Instant Retrieval storage class can save you a lot on storage costs. S3 Glacier Instant Retrieval has a minimum storage duration of 90 days.

S3 Glacier Flexible Retrieval

This storage class is ideal for archiving data you access semi-annually. As its name implies, the S3 Glacier Flexible Retrieval storage class offers flexible retrieval times from minutes to hours, unlike the S3 Glacier Instant Retrieval. However, it has the same minimum storage duration as S3 Glacier Instant Retrieval, which is 90 days.

When you restore the object, you can choose from three retrieval tiers.

- The expedited retrieval option generally restores an object within 1 to 5 minutes.

- Meanwhile, the standard retrieval takes 3 to 5 hours.

- The third option is bulk retrieval, which is free, but you must wait for 5 to 12 hours to access your objects.

So, choosing the one that fits your specific use case is your call.

S3 Glacier Flexible Retrieval is usually recommended for backup or disaster recovery because it allows you to archive large sets of data and retrieve them at a lower cost. However, once the objects are archived, you cannot access them in real time. You must submit a restore request to access stored objects and wait until it completes.
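As a rough sketch of what such a restore request might look like, the helper below builds the request payload for one of the three tiers. The helper name, bucket name, and object key are hypothetical. The block itself needs no AWS access; the commented part shows how the payload could be passed to boto3's `restore_object` call, assuming boto3 is installed and credentials are configured.

```python
FLEXIBLE_RETRIEVAL_TIERS = ("Expedited", "Standard", "Bulk")


def build_restore_request(tier: str, keep_days: int) -> dict:
    """Build a restore request payload for an archived object."""
    if tier not in FLEXIBLE_RETRIEVAL_TIERS:
        raise ValueError(f"unknown retrieval tier: {tier!r}")
    if keep_days < 1:
        raise ValueError("keep_days must be at least 1")
    return {
        "Days": keep_days,  # how long the restored copy stays available
        "GlacierJobParameters": {"Tier": tier},
    }


request = build_restore_request("Bulk", keep_days=7)
print(request)

# Sending the request (not executed here):
#   import boto3
#   boto3.client("s3").restore_object(
#       Bucket="my-backup-bucket",     # hypothetical bucket name
#       Key="archives/2023-q4.tar",    # hypothetical object key
#       RestoreRequest=request,
#   )
```

The restored copy is temporary: after the number of days in the request, only the archived object remains.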

S3 Glacier Deep Archive

Finally, let’s discuss the most cost-effective storage class, and my favorite: S3 Glacier Deep Archive. As the name suggests, this storage option is designed to store rarely accessed data deep down the ‘glacier’. It is especially recommended for meeting regulatory requirements or long-term archiving, as it allows storing large amounts of data for many years.

Like S3 Glacier Flexible Retrieval, S3 Glacier Deep Archive does not offer real-time access. So, you must create a restore request to access your objects, and in that request, you choose one of the two retrieval tiers for your use case.

- The standard retrieval option generally retrieves your objects within 12 hours.

- Bulk retrieval, on the other hand, can take up to 48 hours.

Also, the S3 Glacier Deep Archive differs from other S3 Glacier storage classes with its minimum storage duration of 180 days.

As you will read in my use case below, you can also use Amazon S3 Glacier Deep Archive for backups and disaster recovery if your business recovery time objective (RTO) allows you to wait up to 72 hours to restore from your backups.
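One way to reason about RTO is to compare it with the upper-bound restore times quoted in this post. The sketch below does that for both archival classes that need a restore request. The function name is hypothetical, `GLACIER` and `DEEP_ARCHIVE` are the API identifiers for S3 Glacier Flexible Retrieval and S3 Glacier Deep Archive, and the idea that the slowest tier that still fits is the one to prefer is an assumption based on slower tiers generally costing less to retrieve.

```python
# Upper-bound restore times in hours, taken from the tiers described in this post.
RESTORE_HOURS = {
    "GLACIER": {"Expedited": 5 / 60, "Standard": 5, "Bulk": 12},
    "DEEP_ARCHIVE": {"Standard": 12, "Bulk": 48},
}


def slowest_tier_within(storage_class: str, rto_hours: float):
    """Return the slowest retrieval tier that still fits the RTO, or None."""
    tiers = RESTORE_HOURS[storage_class]
    fitting = {name: hours for name, hours in tiers.items() if hours <= rto_hours}
    if not fitting:
        return None  # no tier of this class can meet the RTO
    return max(fitting, key=fitting.get)


print(slowest_tier_within("DEEP_ARCHIVE", 72))  # Bulk
print(slowest_tier_within("DEEP_ARCHIVE", 24))  # Standard
print(slowest_tier_within("DEEP_ARCHIVE", 6))   # None
print(slowest_tier_within("GLACIER", 1))        # Expedited
```

A result of `None` is the signal that the storage class itself is too slow for the recovery target, whichever tier you pick.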

S3 Intelligent-Tiering: What if you don’t know how often your objects are accessed?

You may have objects whose access patterns you can’t predict. In this case, you can choose the S3 Intelligent-Tiering storage class for automatic cost savings. Once you use this class, AWS will automatically monitor your objects’ access patterns and transfer them to the most effective access tier for you. The objects will be moved between three access tiers based on the access frequency: Frequent Access, Infrequent Access, and Archive Instant Access.

- When first transitioning to the S3 Intelligent-Tiering storage class, the objects are stored in the Frequent Access tier by default.

- Then, if an object has no access for 30 consecutive days, it will be moved to the Infrequent Access tier.

- Lastly, the objects not accessed for 90 consecutive days will be moved to the Archive Instant Access tier. All three tiers offer the same low latency as S3 Standard while helping you save on storage costs.

Amazon S3 also offers two optional archive tiers, Archive Access and Deep Archive Access, which you can activate under the S3 Intelligent-Tiering storage class. They correspond to the S3 Glacier Flexible Retrieval and S3 Glacier Deep Archive storage classes, respectively.
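The tiering rules for the three automatic tiers can be summarized in a few lines. This is only a model of the thresholds given above, not how AWS implements the feature: the function name is made up, and the two optional archive tiers are left out.

```python
def intelligent_tiering_tier(days_since_last_access: int) -> str:
    """Map the number of consecutive days without access to an access tier."""
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access cannot be negative")
    if days_since_last_access >= 90:
        return "Archive Instant Access"
    if days_since_last_access >= 30:
        return "Infrequent Access"
    return "Frequent Access"


for days in (0, 29, 30, 89, 90):
    print(days, intelligent_tiering_tier(days))
```

An access resets the counter to zero in this model, which is why an object that is read regularly never leaves the Frequent Access tier.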

Automating S3 Storage Management with Lifecycle Policies

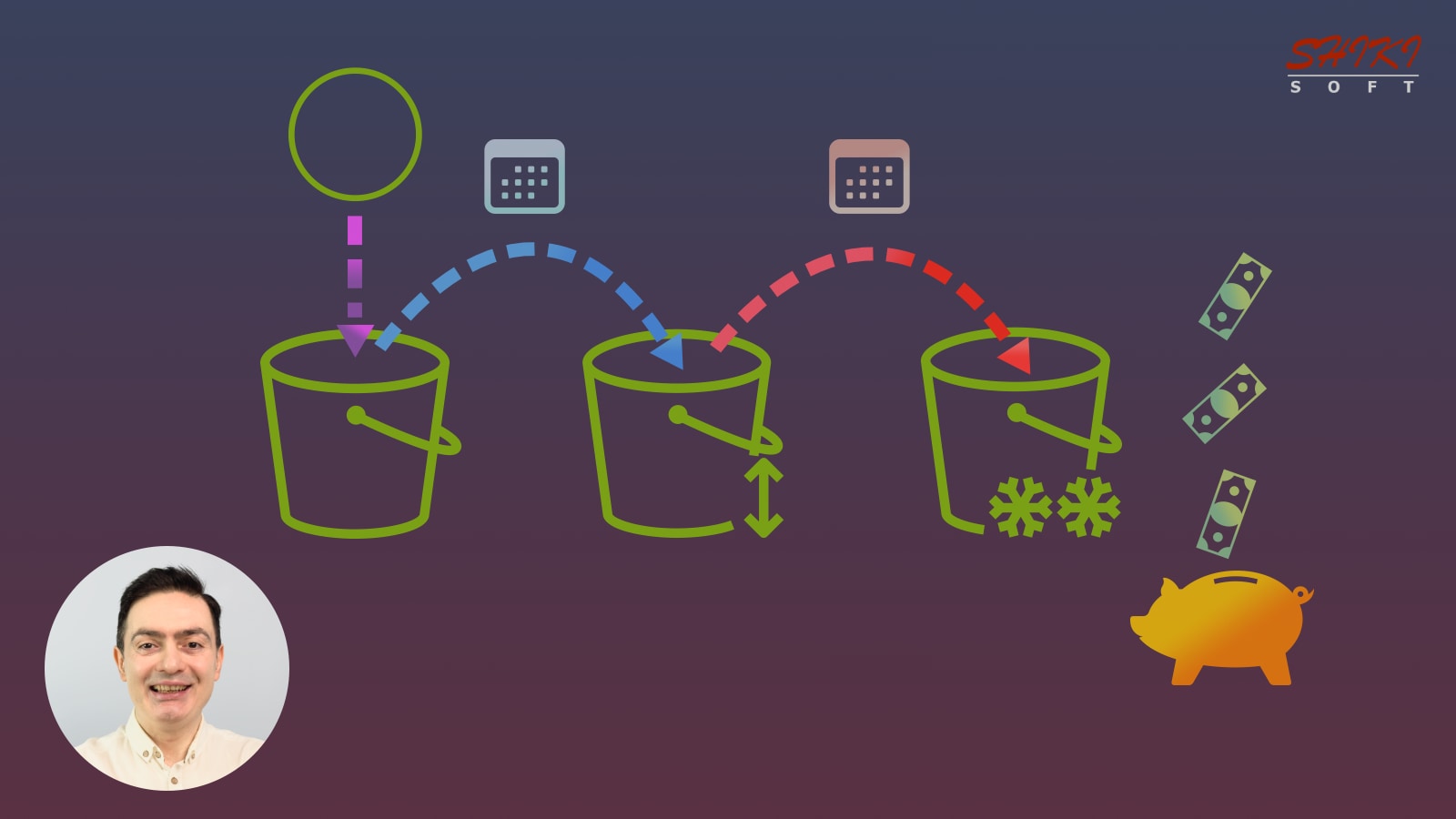

If your object’s access pattern changes over time, you can automatically move it to lower-cost classes and eventually delete it when it is no longer relevant to keep. By configuring S3 lifecycle policies, you can transition objects to different storage classes or permanently delete them after a specific period.

An S3 lifecycle policy consists of rules that will apply to your objects throughout their lifetime. You can set up a transition action to automatically move an object to a different storage class after a specified period. Also, you can define a delete action to delete an object permanently.

The primary difference between a lifecycle policy and S3 Intelligent-Tiering is that a lifecycle policy doesn’t take objects’ access patterns into account. Instead, you customize the duration between the transition actions yourself, as long as it aligns with the minimum storage durations. For example:

- You can move an object automatically to Standard-IA 45 days after creation. You can’t move an object to Standard-IA or One Zone-IA before it has spent 30 days in S3 Standard.

- However, Standard-IA has a 30-day minimum storage duration. Hence, you can define another rule to move it to S3 Glacier storage classes after an additional 30 days (total: 75 days).

- If you don’t have to keep the object, you can just delete it without moving to S3 Glacier storage classes. You can align the rule according to your use case.
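The example above could be written as a lifecycle configuration along these lines. Treat it as a sketch: the rule ID, the key prefix, and the 365-day expiration are made-up values, and `GLACIER` is the API identifier for S3 Glacier Flexible Retrieval. The small checker only mirrors the 30-day guidance from this post and is not a substitute for the validation S3 performs itself. The dictionary has the shape boto3's `put_bucket_lifecycle_configuration` accepts as `LifecycleConfiguration`.

```python
IA_CLASSES = ("STANDARD_IA", "ONEZONE_IA")

lifecycle_configuration = {
    "Rules": [
        {
            "ID": "tier-down-then-expire",     # hypothetical rule name
            "Filter": {"Prefix": "reports/"},  # hypothetical key prefix
            "Status": "Enabled",
            "Transitions": [
                {"Days": 45, "StorageClass": "STANDARD_IA"},
                {"Days": 75, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": 365},       # hypothetical retention period
        }
    ]
}


def check_transitions(rule: dict) -> list:
    """Return a list of problems found in a rule's transition schedule."""
    problems = []
    previous_days, previous_class = 0, "STANDARD"
    for transition in rule.get("Transitions", []):
        days, storage_class = transition["Days"], transition["StorageClass"]
        if storage_class in IA_CLASSES and days < 30:
            problems.append(f"{storage_class} transition before day 30")
        if previous_class in IA_CLASSES and days - previous_days < 30:
            problems.append(f"less than 30 days spent in {previous_class}")
        previous_days, previous_class = days, storage_class
    return problems


print(check_transitions(lifecycle_configuration["Rules"][0]))  # []
```

Keeping the whole schedule in one rule makes the day counts easy to compare, since every `Days` value is measured from the object’s creation.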

My Use Case: Backup Storage for Final Cut Pro X Libraries

Let me give you another example. As you may already know, I have online courses on Udemy. While producing these courses, I use Final Cut Pro X to edit my videos. So, I occasionally take backups of my Final Cut libraries and upload them to S3.

These backup files are large and take up GBs of storage space. I only access these files if there is a problem on my local disk drives for disaster recovery. Keeping them in the S3 Standard class would cost me a lot, considering the new versions I continue to upload. So, I needed a cost-effective solution for my backup storage.

As a solution, I defined an S3 lifecycle rule to move the object to S3 Glacier Deep Archive storage class on day 0, as I don’t need to access it unless there is a disaster in my office. In that unlikely scenario, I can wait up to 72 hours to restore the backup library.

Besides, to keep the storage costs down even more, another rule automatically deletes any previous versions from S3 Glacier Deep Archive after 6 months. That is because if I haven’t needed a previous version in 6 months, I almost certainly won’t need it later. However, I always keep the latest version in S3 Glacier Deep Archive. This way, I cut my backup storage costs on S3 by a factor of 10.
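For readers who want to try something similar, here is one way two such rules might be expressed. It is an illustration rather than my exact configuration: the rule IDs and the `fcpx-backups/` prefix are hypothetical, 6 months is approximated as 180 days, and the second rule assumes versioning is enabled on the bucket. The `describe` helper is only there to print the rules in plain words.

```python
backup_lifecycle = {
    "Rules": [
        {
            "ID": "archive-backups-on-upload",      # hypothetical rule name
            "Filter": {"Prefix": "fcpx-backups/"},  # hypothetical key prefix
            "Status": "Enabled",
            "Transitions": [{"Days": 0, "StorageClass": "DEEP_ARCHIVE"}],
        },
        {
            "ID": "expire-previous-versions",       # hypothetical rule name
            "Filter": {"Prefix": "fcpx-backups/"},
            "Status": "Enabled",
            # Applies only to versions that are no longer the latest one.
            "NoncurrentVersionExpiration": {"NoncurrentDays": 180},
        },
    ]
}


def describe(config: dict) -> list:
    """Summarize each rule of a lifecycle configuration in plain words."""
    lines = []
    for rule in config["Rules"]:
        for t in rule.get("Transitions", []):
            lines.append(f"{rule['ID']}: move to {t['StorageClass']} after {t['Days']} days")
        expiry = rule.get("NoncurrentVersionExpiration")
        if expiry:
            lines.append(f"{rule['ID']}: delete previous versions after {expiry['NoncurrentDays']} days")
    return lines


for line in describe(backup_lifecycle):
    print(line)
```

Because the second rule targets only noncurrent versions, the latest backup is never deleted by it.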

Conclusion

Amazon S3 provides different storage classes to manage your data storage efficiently and cost-effectively. S3 Lifecycle Management is also an automated way to move your objects between different storage classes or permanently expire them. In this post, we analyzed the main features of each S3 storage class to help you learn how to use S3 lifecycle policies for your unique cases. I hope you can benefit from it.

Thanks for reading, and see you in our other posts.

References